CCF (Core Computational Facility) @ UQ run by ITS / SMP

asterix partition

The asterix compute nodes are part of the getafix cluster and form a partition called asterix in the Slurm queuing system.

Compiling CUDA code

The highest CUDA version available on the asterix nodes is 8.0; other versions are also available for compatibility with older code from asterix and ghost. You'll need to load the appropriate cuda module first:

module load cuda/6.0

module load cuda/6.5

module load cuda/7.5

module load cuda/8.0

To ensure that your code is compiled for the Fermi architecture of the asterix GPUs, use the compiler flag -arch sm_20.

There are also many other compiler switches available: run nvcc -h or see NVIDIA's documentation at http://developer.nvidia.com/nvidia-gpu-computing-documentation .
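
As a rough sketch of the compile step described above: the source file name cudademo.cu is an assumption (chosen to match the cudademo executable used in the example submission script on this page), and cuda/8.0 is just one of the available modules. Off the cluster, or if the source file is missing, the script only prints the command it would run.

```shell
#!/bin/bash
# Sketch only: SRC is a hypothetical file name, not something shipped on the cluster.
SRC=cudademo.cu
EXE="${SRC%.cu}"                      # cudademo.cu -> cudademo

# -arch sm_20 targets the Fermi GPUs in the asterix nodes.
NVCC_CMD="nvcc -arch sm_20 -o $EXE $SRC"

# The "module" command only exists on the cluster, so skip it elsewhere.
if type module >/dev/null 2>&1; then
    module load cuda/8.0
fi

# Compile if nvcc and the source file are both present, otherwise do a dry run.
if command -v nvcc >/dev/null 2>&1 && [ -f "$SRC" ]; then
    $NVCC_CMD
else
    echo "would run: $NVCC_CMD"
fi
```

Swap cuda/8.0 for one of the older modules if the code depends on an earlier toolkit.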

Submitting jobs

Asterix jobs should be submitted to the Slurm queue manager by adding -p asterix to your submission command or by adding this to your submission script:

#SBATCH --partition asterix

See the Nvidia GPU howto page for general help about submitting GPU jobs.

Specify the number of GPUs required for your job (replace n with that number) with:

#SBATCH --gres=gpu:n

Specify the type of GPU you want to use (e.g. 2070 or 2050) with:

#SBATCH --constraint=2070

To run on the large memory node (96GB RAM), request more memory than the 48GB nodes can provide, for example:

#SBATCH --mem=90G

Example submission script:

#!/bin/bash

#SBATCH --partition asterix

#SBATCH --gres=gpu:1

#SBATCH --mem=1G

#SBATCH --time=05:00:00

module load cuda

srun cudademo
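
The same options can also be given on the sbatch command line, where they take precedence over the #SBATCH lines in the script. A minimal sketch, assuming the script above has been saved as job.sh (a hypothetical name) and that the job wants both C2070 GPUs on the large memory node. If sbatch or the script is not available, it only prints the command.

```shell
#!/bin/bash
# Sketch only: job.sh is a hypothetical name for the submission script.
JOB_SCRIPT=job.sh

# Two GPUs of type 2070 plus 90G of RAM: according to the node list on this
# page, only the 96GB node can satisfy the memory request.
SBATCH_CMD="sbatch -p asterix --gres=gpu:2 --constraint=2070 --mem=90G $JOB_SCRIPT"

# Submit if sbatch and the script are both present, otherwise do a dry run.
if command -v sbatch >/dev/null 2>&1 && [ -f "$JOB_SCRIPT" ]; then
    $SBATCH_CMD
else
    echo "would run: $SBATCH_CMD"
fi
```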

Asterix Information

asterix was purchased in 2011 by SMP as a GPU-specific cluster, but each node also has 2 x 4 core CPUs.

- Bought with a UQ Major Equipment and Infrastructure Grant of $193K ex-GST, inc. $70K matching Science/SMP funds (investigators included Anthony Roberts, Matthew Davis, etc.).

- One whole Rack... Xenon Nitro T5 with 36 GPUs (33 still operational at 16 May 2017).

- Has a dedicated master node running the SGE queuing system, with NFS disks served off the master node.

- 120 CPU cores on 15 compute nodes each with 2 x 4 core Intel X5650 @ 2.40GHz (2010)

- Total of 14 x Nvidia Tesla C2070 (max 2 / node, 6GB RAM)

- Total of 22 x Nvidia Tesla C2050 (max 4 / node, 3GB RAM)

- Located in Parnell chill-out room 7-127.

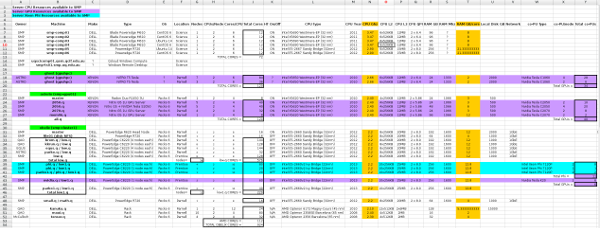

System status

You can see the CPU loads on the cluster via the webpage http://faculty-cluster.hpc.net.uq.edu.au/ganglia/

This page can only be accessed from a computer within the UQ domain (or after starting a UQ VPN session).

This system is out of warranty.

There have been reports of overheating when all the GPUs are under full load, and some GPUs have been observed to fail before others.

Compare the GPUs on c-0-0 with those on c-3-0 using the nvidia-smi command: the fan speed is not available for the c-2-x and c-3-x GPUs, and they run at a higher temperature on average.
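
One way to make that comparison, sketched under assumptions: the node names c-0-0 and c-3-0 are taken from the text above and may need adjusting to the names Slurm currently uses, and the job is assumed to need a GPU allocation to reach the node. The queried fields (index, name, fan.speed, temperature.gpu) are standard nvidia-smi query fields. Without srun the script only prints the commands.

```shell
#!/bin/bash
# Sketch only: node names come from the paragraph above and may differ
# from the names Slurm uses today.
NODES="c-0-0 c-3-0"

# Report fan speed and temperature per GPU in CSV form.
QUERY="nvidia-smi --query-gpu=index,name,fan.speed,temperature.gpu --format=csv"

for node in $NODES; do
    # Depending on the Slurm configuration, only the allocated GPU may be listed.
    CMD="srun -p asterix -w $node --gres=gpu:1 $QUERY"
    if command -v srun >/dev/null 2>&1; then
        $CMD
    else
        echo "would run: $CMD"
    fi
done
```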

System documentation

The asterix system documentation, originally written by Vivien Challis, can be found below. Also see the Nvidia GPU howto page.

Slave node types

There are 15 compute nodes in the asterix partition. They have a systematic naming scheme, so you can tell which GPUs a node has from its name. The constraints, node names, RAM and GPU configurations are:

- 2050 machines a-0-0 to a-0-4 have 48GB RAM and 1 or 2 x C2050 GPU

- 2070 machine a-1-0 has 96GB RAM and 2 x C2070 GPU

- 2070 machines a-2-0 to a-2-5 have 48GB RAM and 1 or 2 x C2070 GPU

- 2050 machines a-3-0 to a-3-2 have 48GB RAM and 3 or 4 x S2050 GPU

The 2050 GPUs have 3GB of RAM; the 2070 GPUs have 6GB of RAM.
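
The memory sizes above can guide the choice of --constraint. A small sketch, assuming a hypothetical helper called pick_constraint (not a cluster tool) that takes the GPU memory a job needs per GPU, in whole GB, and uses the nominal 3GB and 6GB figures:

```shell
#!/bin/bash
# Sketch only: pick_constraint is a hypothetical helper, not a cluster tool.
# Argument: GPU memory needed per GPU, in whole GB (nominal card sizes).
pick_constraint() {
    local need_gb=$1
    if [ "$need_gb" -le 3 ]; then
        echo 2050          # fits on the 3GB cards
    elif [ "$need_gb" -le 6 ]; then
        echo 2070          # needs the 6GB cards
    else
        echo "no asterix GPU has ${need_gb}GB of memory" >&2
        return 1
    fi
}

# Print the directive for a job that needs about 5GB per GPU.
echo "#SBATCH --constraint=$(pick_constraint 5)"
```

Jobs that fit in 3GB are pointed at the 2050 nodes here so the 6GB cards stay free for jobs that need them; that is a design choice of this sketch, not a site policy.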

This page last updated 30th January 2020. [Contacts/help]